I Created A Laravel 10 OpenAI ChatGPT Composer Package

In my last tutorial, I created a Laravel site that exposed an OpenAI ChatGPT API. It was fun to build, and while I was thinking of ways to improve it, it dawned on me to turn it into a Composer package and share it with the world. Honestly, it took less time than I expected, and I have already integrated the package into a few applications (it feels good to composer require your own shit!).

What’s The Benefit?

1. Reusability

I know for a fact that I will be using OpenAI in the majority of my projects going forward. Instead of rewriting the same functionality over and over, I’m going to package up what I know I will need every time.

2. Modularity

Breaking my code into modules allows me to think about my applications from a high-level view. I call it Lego Theory; all of my modules are legos and my app is the lego castle I’m building.

3. Discoverability

Publishing packages directly helps my brand via discoverability. If I produce high-quality, in-demand open-source software, more people will use it, and the more people use it, the more people know who I am. This helps me when I am doing things like applying for contracts or conference talks.

Creating The Code

Scaffold From Spatie

The wonderful engineers at Spatie have created a package skeleton for Laravel, which is what I used as a starting point for my package. If you are using GitHub, you can use the repo as a template; from the command line, clone it with the following command:

git clone git@github.com:spatie/package-skeleton-laravel.git laravel-openai-api

There is a configure.php script that replaces all of the placeholder values with the values you provide for your package:

php ./configure.php

Now we can get to the nitty gritty.

The Front-Facing Object

After running the configure script, the skeleton's main class is renamed for your package. In my case the file is called LaravelOpenaiApi.php, and it looks like this:

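<?php

namespace Mastashake\LaravelOpenaiApi;

use InvalidArgumentException;
use Mastashake\LaravelOpenaiApi\Models\Prompt;
use OpenAI\Laravel\Facades\OpenAI;

class LaravelOpenaiApi
{
    // Route the request to the matching generator based on the requested type.
    public function generateResult(string $type, array $data): Prompt
    {
        switch ($type) {
            case 'text':
                return $this->generateText($data);
            case 'image':
                return $this->generateImage($data);
            default:
                // An unknown type would otherwise fall through and violate the Prompt return type.
                throw new InvalidArgumentException("Unsupported result type: {$type}");
        }
    }

    public function generateText(array $data): Prompt
    {
        $result = OpenAI::completions()->create($data);

        return $this->savePrompt($result, $data);
    }

    public function generateImage(array $data): Prompt
    {
        $result = OpenAI::images()->create($data);

        return $this->savePrompt($result, $data);
    }

    // Persist the prompt text together with the raw API response.
    private function savePrompt($result, array $data): Prompt
    {
        return Prompt::create([
            'prompt_text' => $data['prompt'],
            // The response objects expose toArray(), which the model's array cast stores as JSON.
            'data' => $result->toArray(),
        ]);
    }
}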

It can generate text and images and save the prompts; it looks at the type provided to determine which resource to generate. It’s all powered by the OpenAI Laravel facade.
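To make the shape of the data argument concrete, here is a minimal sketch of the payloads each type might receive. The helper names textPayload and imagePayload are hypothetical and not part of the package; the keys (model, prompt, max_tokens, n, size) are OpenAI request parameters that the class passes straight through.

```php
<?php

// Hypothetical helpers (not part of the package) that build the $data array
// handed to generateResult(). The keys mirror OpenAI request parameters.

function textPayload(string $prompt, string $model = 'text-davinci-003', int $maxTokens = 16): array
{
    return [
        'model' => $model,
        'prompt' => $prompt,
        'max_tokens' => $maxTokens,
    ];
}

function imagePayload(string $prompt, int $n = 1, string $size = '512x512'): array
{
    return [
        'prompt' => $prompt,
        'n' => $n,
        'size' => $size,
    ];
}

$text = textPayload('Write a haiku about Laravel');
$image = imagePayload('A lego castle at sunset', 2);

// Inside a Laravel app with the package installed, these would be passed along as:
// (new LaravelOpenaiApi())->generateResult('text', $text);
// (new LaravelOpenaiApi())->generateResult('image', $image);
```

Both payloads carry a prompt key, which is the one field the class reads itself when it stores the prompt text.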

The Migration

Edit the default migration to use the prompts migration from the Laravel API tutorial. Open it up and replace the contents with the following:

<?php

use Illuminate\Database\Migrations\Migration;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Support\Facades\Schema;

return new class extends Migration
{
    /**
     * Run the migrations.
     */
    public function up(): void
    {
        Schema::create('prompts', function (Blueprint $table) {
            $table->id();
            $table->string('prompt_text');
            $table->json('data');
            $table->timestamps();
        });
    }

    /**
     * Reverse the migrations.
     */
    public function down(): void
    {
        Schema::dropIfExists('prompts');
    }
};

The Model

Create a file called src/Models/Prompt.php and copy the old Prompt code inside:

<?php

namespace Mastashake\LaravelOpenaiApi\Models;

use Illuminate\Database\Eloquent\Factories\HasFactory;
use Illuminate\Database\Eloquent\Model;

class Prompt extends Model
{
    use HasFactory;

    protected $guarded = [];

    protected $casts = [
        'data' => 'array',
    ];
}

The Controller

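For the controllers, we have to create a base Controller and a PromptController. Create a file called src/Http/Controllers/Controller.php (the class below is named Controller, so the file name has to match for PSR-4 autoloading to find it):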

<?php

namespace Mastashake\LaravelOpenaiApi\Http\Controllers;

use Illuminate\Foundation\Auth\Access\AuthorizesRequests;
use Illuminate\Foundation\Bus\DispatchesJobs;
use Illuminate\Foundation\Validation\ValidatesRequests;
use Illuminate\Routing\Controller as BaseController;

class Controller extends BaseController
{
    use AuthorizesRequests, DispatchesJobs, ValidatesRequests;
}

Now we will create our PromptController and inherit from the base Controller:

<?php

namespace Mastashake\LaravelOpenaiApi\Http\Controllers;

use Illuminate\Http\Request;
use Mastashake\LaravelOpenaiApi\LaravelOpenaiApi;

class PromptController extends Controller
{
    public function generateResult(Request $request)
    {
        $ai = new LaravelOpenaiApi();

        // Everything except the type is forwarded to OpenAI as the request payload.
        $prompt = $ai->generateResult($request->type, $request->except(['type']));

        return response()->json([
            'data' => $prompt,
        ]);
    }
}

OpenAI and ChatGPT can generate multiple types of responses, so we want the user to be able to choose which type of resource to generate and then pass that data on to the underlying engine.

The Route

Create a routes/api.php file to store our API route:

<?php

use Illuminate\Http\Request;
use Illuminate\Support\Facades\Route;

/*
|--------------------------------------------------------------------------
| API Routes
|--------------------------------------------------------------------------
|
| Here is where you can register API routes for your application. These
| routes are loaded by the RouteServiceProvider and all of them will
| be assigned to the "api" middleware group. Make something great!
|
*/

Route::group(['prefix' => '/api'], function () {
    if (config('openai.use_sanctum') == true) {
        Route::middleware(['api', 'auth:sanctum'])->post(config('openai.api_url'), 'Mastashake\LaravelOpenaiApi\Http\Controllers\PromptController@generateResult');
    } else {
        Route::post(config('openai.api_url'), 'Mastashake\LaravelOpenaiApi\Http\Controllers\PromptController@generateResult');
    }
});

Depending on the values in the config file (we will get to it in a second, calm down), the user may want to use Laravel Sanctum for token-based authenticated requests. In fact, I highly suggest you do if you don’t want your token usage abused; for development and testing, I suppose leaving it off is fine. I made it configurable to keep the package robust and extensible.
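With Sanctum enabled, a client has to send its API token as a bearer token. Here is a rough sketch of such a request in plain PHP; buildGenerateRequest is a hypothetical helper, and the host name and token are placeholders, so treat it as an illustration rather than the package's own client.

```php
<?php

// Hypothetical helper: builds stream-context options for a POST to the package route.
// Sanctum API tokens travel in the Authorization header as bearer tokens.
function buildGenerateRequest(string $token, array $payload): array
{
    return [
        'http' => [
            'method' => 'POST',
            'header' => implode("\r\n", [
                'Content-Type: application/json',
                'Accept: application/json',
                'Authorization: Bearer ' . $token,
            ]),
            'content' => json_encode($payload),
        ],
    ];
}

$options = buildGenerateRequest('your-sanctum-token', [
    'type' => 'text',
    'model' => 'text-davinci-003',
    'prompt' => 'Say hello',
]);

// To actually send it (placeholder host, default route from the config):
// $response = file_get_contents(
//     'https://example.test/api/generate-result',
//     false,
//     stream_context_create($options)
// );
```

The type field tells the controller what to generate, and every other field is forwarded to OpenAI untouched.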

The Config File

Create a file called config/openai.php that will hold the default config values for the package. This will be published into any application that you install this package in:

<?php

return [

    /*
    |--------------------------------------------------------------------------
    | OpenAI API Key and Organization
    |--------------------------------------------------------------------------
    |
    | Here you may specify your OpenAI API Key and organization. This will be
    | used to authenticate with the OpenAI API - you can find your API key
    | and organization on your OpenAI dashboard, at https://openai.com.
    */

    'api_key' => env('OPENAI_API_KEY'),

    'organization' => env('OPENAI_ORGANIZATION'),

    // Route path for the package endpoint; falls back to /generate-result.
    'api_url' => env('OPENAI_API_URL', '/generate-result'),

    // Whether to protect the route with the auth:sanctum middleware; off by default.
    'use_sanctum' => (bool) env('OPENAI_USE_SANCTUM', false),

];

- The api_key variable is the OpenAI API key.

- The organization variable is the OpenAI organization, if one exists.

- The api_url variable is the user-defined URL for the API route; if one is not defined, the route defaults to /api/generate-result.

- The use_sanctum variable defines whether the API will use the auth:sanctum middleware.
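The fallback behaviour of these values can be sketched without Laravel. The function resolveOpenAiConfig below is a hypothetical stand-in that reads from a plain array instead of the env() helper, and its boolean parsing via filter_var is an assumption that is a little stricter than the config file's own comparison.

```php
<?php

// Hypothetical stand-in for the config file's fallback logic, written against a
// plain array instead of Laravel's env() helper so it runs anywhere.
function resolveOpenAiConfig(array $env): array
{
    return [
        'api_key' => $env['OPENAI_API_KEY'] ?? null,
        'organization' => $env['OPENAI_ORGANIZATION'] ?? null,
        // Fall back to the package default when no URL is configured.
        'api_url' => $env['OPENAI_API_URL'] ?? '/generate-result',
        // "true", "1", "on" and "yes" enable Sanctum; anything else, or nothing, disables it.
        'use_sanctum' => filter_var($env['OPENAI_USE_SANCTUM'] ?? false, FILTER_VALIDATE_BOOLEAN),
    ];
}

$defaults = resolveOpenAiConfig([]);
$custom = resolveOpenAiConfig([
    'OPENAI_API_URL' => '/ai',
    'OPENAI_USE_SANCTUM' => 'true',
]);
```

With no environment values set, the route stays at the default path and Sanctum stays off, which matches the defaults described in the list above.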

The Command

The package includes an artisan command for generating results from the command line. Create a file called src/Commands/LaravelOpenaiApiCommand.php:

<?php

namespace Mastashake\LaravelOpenaiApi\Commands;

use Illuminate\Console\Command;
use Mastashake\LaravelOpenaiApi\LaravelOpenaiApi;

class LaravelOpenaiApiCommand extends Command
{
    public $signature = 'laravel-openai-api:generate-result';

    public $description = 'Generate Result';

    public function handle(): int
    {
        $data = [];
        $suffix = null;
        $n = 1;
        $temperature = 1;
        $displayJson = false;
        $max_tokens = 16;

        $type = $this->choice(
            'What are you generating?',
            ['text', 'image'],
            0
        );

        $prompt = $this->ask('Enter the prompt');
        $data['prompt'] = $prompt;

        // Text completions take a few extra options that images do not.
        if ($type == 'text') {
            $model = $this->choice(
                'What model do you want to use?',
                ['text-davinci-003', 'text-curie-001', 'text-babbage-001', 'text-ada-001'],
                0
            );
            $data['model'] = $model;

            if ($this->confirm('Do you wish to add a suffix to the generated result?')) {
                $suffix = $this->ask('What is the suffix?');
            }
            $data['suffix'] = $suffix;

            if ($this->confirm('Do you wish to set the max tokens used(defaults to 16)?')) {
                $max_tokens = (int)$this->ask('Max number of tokens to use?');
            }
            $data['max_tokens'] = $max_tokens;

            if ($this->confirm('Change temperature')) {
                $temperature = (float)$this->ask('What is the temperature(0-2)?');
                $data['temperature'] = $temperature;
            }
        }

        if ($this->confirm('Multiple results?')) {
            $n = (int)$this->ask('Number of results?');
            $data['n'] = $n;
        }

        $displayJson = $this->confirm('Display JSON results?');

        $ai = new LaravelOpenaiApi();
        $result = $ai->generateResult($type, $data);

        if ($displayJson) {
            $this->comment($result);
        }

        // Print each generated text or image URL on its own line.
        if ($type == 'text') {
            $choices = $result->data['choices'];
            foreach ($choices as $choice) {
                $this->comment($choice['text']);
            }
        } else {
            $images = $result->data['data'];
            foreach ($images as $image) {
                $this->comment($image['url']);
            }
        }

        return self::SUCCESS;
    }
}

I’m going to add more inputs later, but for now, this is a good starting point to get back data. I tried to make it as verbose as possible, and I’m always welcoming PRs if you want to add functionality 🙂
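The last part of the command, which picks the printable values out of the stored response, could be pulled into a small pure function. This is only a sketch: extractOutputLines is a hypothetical helper that is not in the package, the trim call is my own addition, and the sample arrays are made up to match the keys the command reads.

```php
<?php

// Hypothetical helper (not part of the package): turns the stored response data into
// the lines the command prints, so the same logic can be reused or unit tested.
function extractOutputLines(string $type, array $data): array
{
    if ($type === 'text') {
        // Completion responses keep each generated text under choices[].text.
        return array_map(fn (array $choice): string => trim($choice['text']), $data['choices'] ?? []);
    }

    // Image responses keep each generated image under data[].url.
    return array_map(fn (array $image): string => $image['url'], $data['data'] ?? []);
}

// Sample data shaped like the responses the command reads.
$textLines = extractOutputLines('text', [
    'choices' => [['text' => "\n\nHello there"], ['text' => 'General Kenobi']],
]);
$imageLines = extractOutputLines('image', [
    'data' => [['url' => 'https://example.test/one.png']],
]);

foreach (array_merge($textLines, $imageLines) as $line) {
    echo $line, PHP_EOL;
}
```

Keeping this logic outside handle() would let the controller or a test reuse it without going through the interactive prompts.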

The Service Provider

All Laravel packages must have a service provider. Open up the default one in the src directory; in my case it was called LaravelOpenaiApiServiceProvider:

<?php

namespace Mastashake\LaravelOpenaiApi;

use Mastashake\LaravelOpenaiApi\Commands\LaravelOpenaiApiCommand;
use Spatie\LaravelPackageTools\Commands\InstallCommand;
use Spatie\LaravelPackageTools\Package;
use Spatie\LaravelPackageTools\PackageServiceProvider;

class LaravelOpenaiApiServiceProvider extends PackageServiceProvider
{
    public function configurePackage(Package $package): void
    {
        /*
         * This class is a Package Service Provider
         *
         * More info: https://github.com/spatie/laravel-package-tools
         */
        $package
            ->name('laravel-openai-api')
            ->hasConfigFile(['openai'])
            ->hasRoute('api')
            ->hasMigration('create_openai_api_table')
            ->hasCommand(LaravelOpenaiApiCommand::class)
            ->hasInstallCommand(function (InstallCommand $command) {
                $command
                    ->publishConfigFile()
                    ->publishMigrations()
                    ->askToRunMigrations()
                    ->copyAndRegisterServiceProviderInApp()
                    ->askToStarRepoOnGitHub('mastashake08/laravel-openai-api');
            });
    }
}

The name is the name of our package. Next we pass in the config file created above, then add our API route and migration. Lastly, we register our command along with an install command that publishes the config file and migrations.

Testing It In Laravel Project

composer require mastashake08/laravel-openai-api

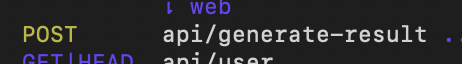

You can run that command in any Laravel project; I used it in the Laravel API tutorial I did last week. If you run php artisan route:list, you will see the API is in your project!

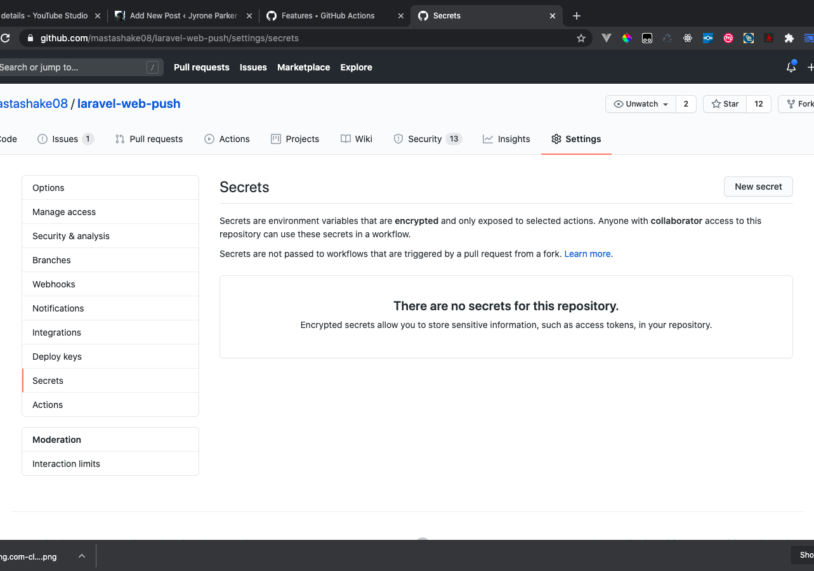

Check The Repo!

This is actually my first-ever Composer package! I would love feedback, stars, and PRs; they would go a long way. You can check out the repo here on GitHub. Please let me know in the comments if this tutorial was helpful and share on social media.
